Install Turso Limbo on Linux

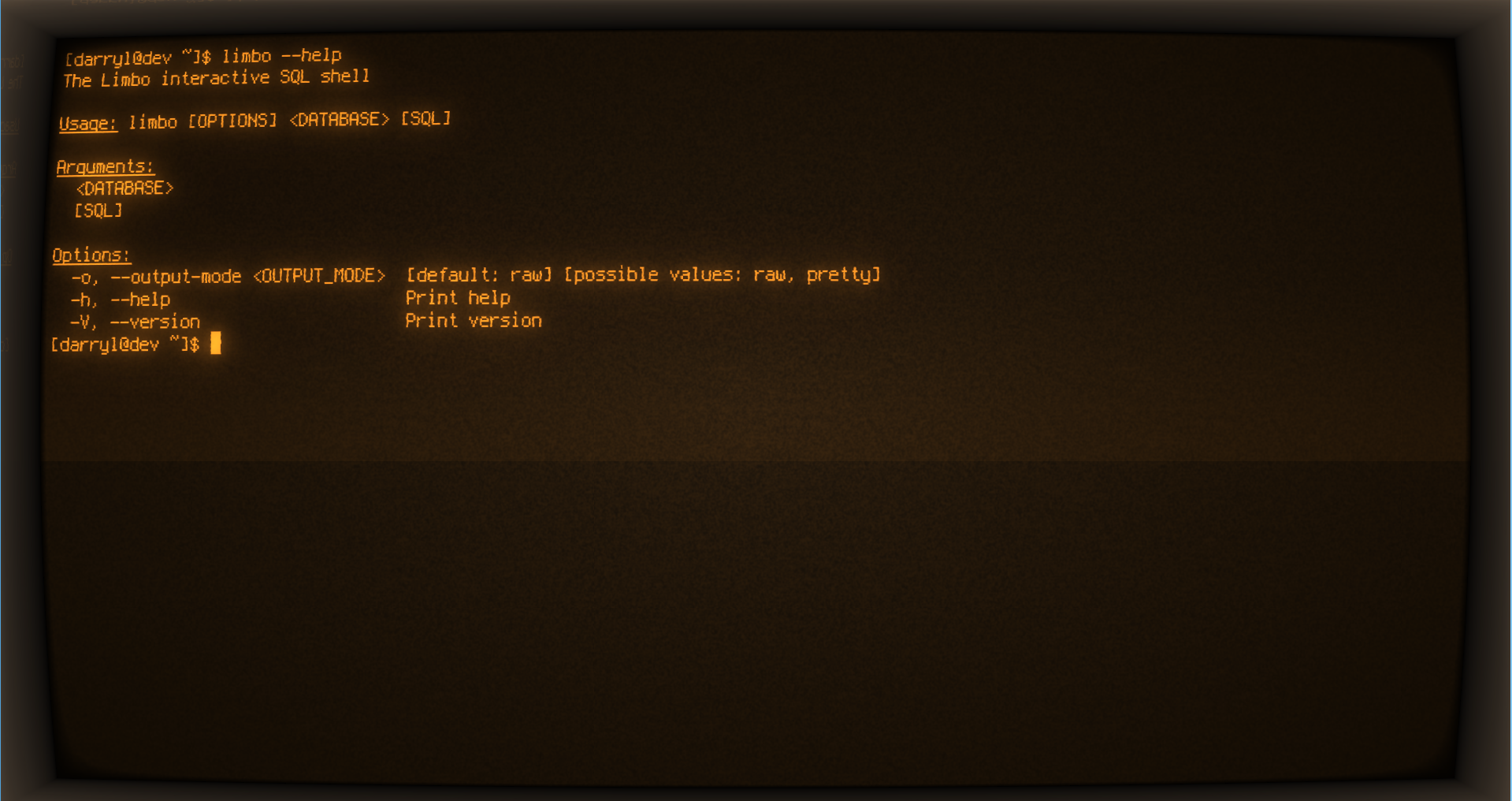

What is Limbo?

According to the Limbo GitHub project, “Limbo is a work-in-progress, in-process OLTP database management system” developed by Turso and compatible with SQLite. You can think of it as another version of SQLite, written in Rust. You can read more about Limbo here.

Installing Limbo

To install Limbo, all you need to do is run …